Remember those clunky text-based AI assistants from last year? Buckle up, because 2024 is all about multi-modal AI, the next frontier in education.

Imagine students learning history through VR simulations that respond to their questions, mastering languages with AI tutors that adapt to their accents, or exploring complex scientific concepts with 3D models analyzed by AI in real time.

Sounds futuristic? It’s already happening! This week we’re exploring multi-modal AI.

Let’s dive in 🤖

~ Sarah

Unleashing Learning Magic: Multi-modal AI in Your Classroom

What is Multi-Modal AI?

Multi-modal AI refers to artificial intelligence systems that can process and understand multiple types of input, such as text, voice, images, videos, and more.

Traditional AI models typically handle a single mode of input. Multi-modal AI takes things to the next level by integrating several forms of data at once, including less familiar ones such as thermal data, to create more intuitive and dynamic learning experiences.

Imagine a classroom where students can interact with AI-powered tools using not just text but also their voices, gestures, and even images. This opens up a world of possibilities for personalized learning, real-time feedback, and enhanced accessibility for students with diverse learning preferences and needs.

This is no longer science fiction; it’s the future of education, knocking at our classroom doors.

Impact

Multi-modal AI has the potential to significantly impact the education system. Here’s a glimpse of the possibilities:

- Personalized Learning: AI tailors content (text, images, videos) to individual student needs and learning preferences.

- Immersive Learning: Students explore VR simulations for deeper understanding and engagement.

- Accessibility & Global Learning: AI tools assist diverse learners and translate content, breaking down language barriers.

- Automated Assessment: AI provides instant feedback, saving educators time, aiding student progress, and opening up more opportunities for gamified learning.

- Real-world Applications: Students engage with simulated scenarios for practical learning.

- Enhanced Communication: AI facilitates multi-modal interactions between students, teachers, and parents.

Example

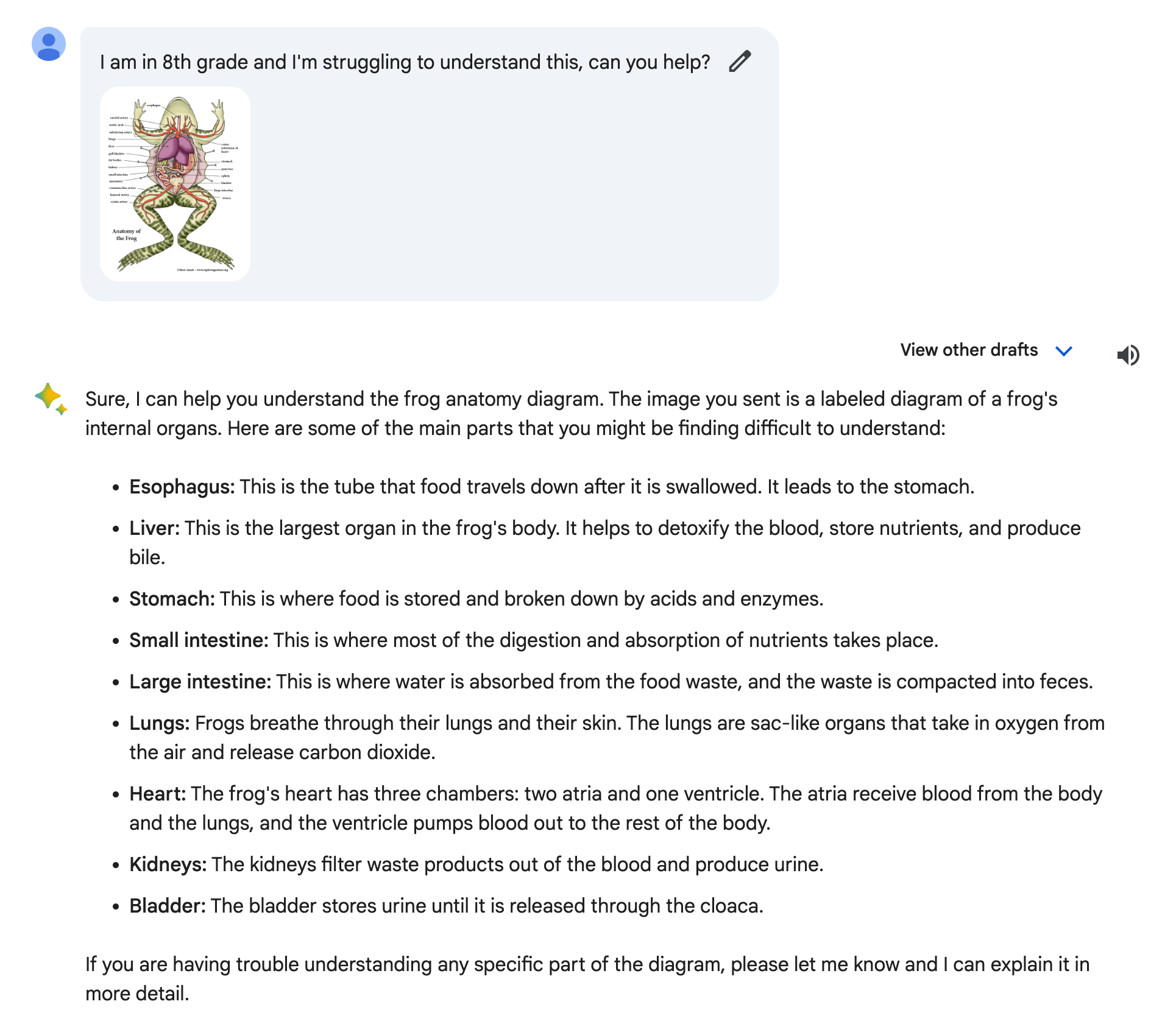

Imagine students being able to upload textbook pages for in-depth explanations.

I uploaded a diagram of a frog’s internal anatomy to Google Bard, and it was able to identify and explain the different parts without any additional context.

Empowering Educators

As educators, you’re at the forefront of this AI revolution in education. AI is here to amplify your expertise, not replace you.

By embracing multi-modal AI tools and platforms, you can create dynamic and engaging learning experiences that cater to the individual needs of your students. Whether it’s using voice-activated assistants for hands-free lesson planning or leveraging image recognition technology to enhance visual learning activities, the possibilities are endless.

Use AI tools to automate repetitive tasks, personalize learning, and provide real-time insights into student progress. Imagine the time you’ll save for deeper connections and individualized support!

Some Tools to Try

➟ GPT-4V: OpenAI’s ChatGPT has been upgraded with multimodal capabilities. GPT-4 with vision (GPT-4V), available in the paid version of ChatGPT, can interpret visual data such as hand-drawn sketches.

➟ Meta’s ImageBind: This trailblazing model combines information from six different modalities: images, text, audio, depth, thermal, and IMU (inertial measurement unit, or motion sensor) data.

➟ Google Bard: With Bard’s latest update, you can now generate images from English-language prompts in most countries around the world, at no cost.

➟ Magic School: An AI assistant for educators.

Looking Ahead

This is just the beginning. As AI evolves, it will continue to shape the future of education in profound ways.

By staying informed about the latest developments in AI technology and actively exploring how it can be integrated into your teaching practice, you can empower your students to thrive in an increasingly AI-driven world.

—

Thank you for all you do as an educator and STEAM advocate.

– The DMA Team

There are three ways we can help your school with its STEAM programming:

🌍 Bring Students to the Global Innovation Race on Campus at Stanford University (July 17-27)

Fuel your students’ passion for technology and innovation while future-proofing their skills with the summer experience of a lifetime! Global Innovation Race is an international call to action for student groups (aged 13-17) across the globe to design solutions that will shape the future. Space is limited. Learn more here!

🌐 Join Leading STEAM Schools in the World

Developed by Stanford-affiliated educators and researchers, Leading STEAM Schools in the World (LSSW) Membership helps schools and educators meet the ever-evolving needs of 21st-century learners. Membership is customizable, combining comprehensive curriculum, tailored consultation, and a supportive global community of like-minded schools.

💡 Become a STEAM Innovator Accredited School

Our STEAM Innovator School Accreditation recognizes and promotes excellence in STEAM education worldwide while empowering educators and schools to achieve the highest standards of quality and innovation in STEAM curriculum, instruction, and assessment.